Alerting

If you are using Conductor, you can configure automatic alerts when certain failure conditions are met.

Alerts require at least a DBOS Teams plan.

Creating Alerts

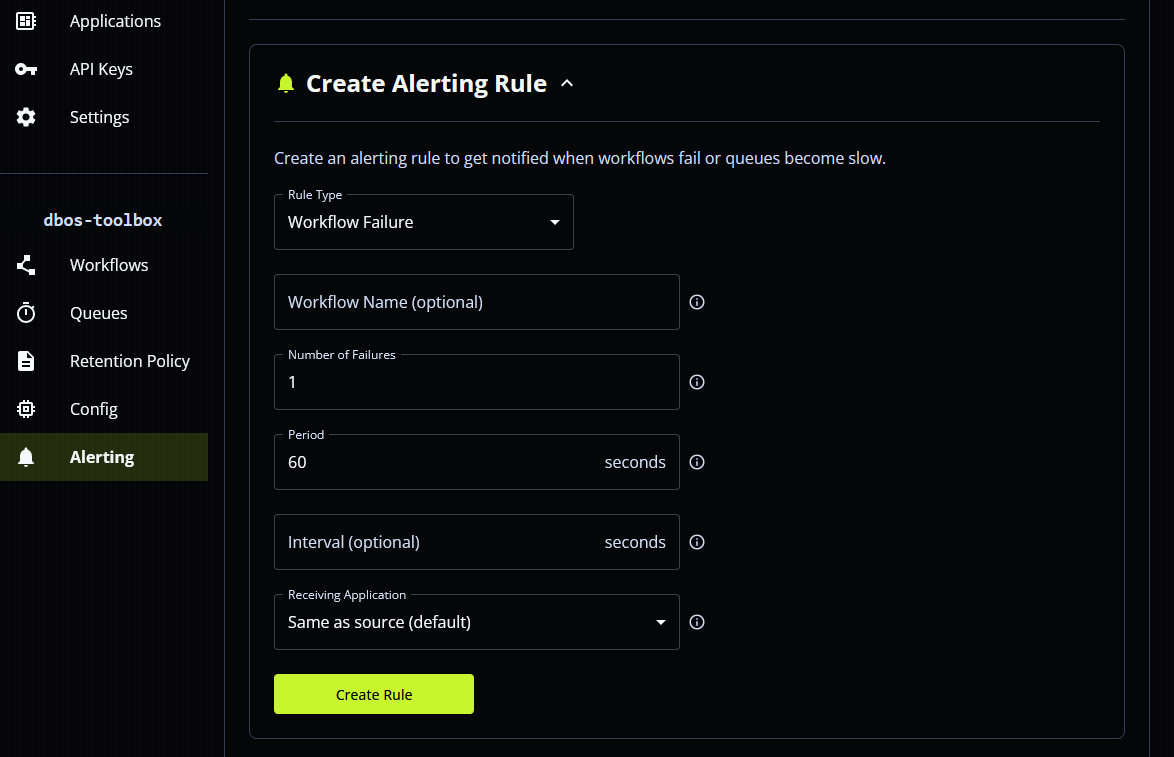

You can create new alerts (or view or update your existing alerts) from your application's "Alerting" page on the DBOS Console.

Currently, you can create alerts for the following failure conditions:

- If a certain number of workflows (parameterizable by workflow type) fail in a set period of time.

- If a workflow remains enqueued for more than a certain period of time (parameterizable by queue name), indicating the queue is overwhelmed or stuck.

- If an application is unresponsive (no connected executors, or connected but unresponsive executors).

You may also specify an application to receive the alert. This does not need to be the same application that generated the alert. For some failure conditions (e.g., unresponsive application), the receiving application must be different from the one generating the alert.

Receiving Alerts

You can register an alert handler in your application to receive alerts from Conductor. Your handler can log the alerts or forward them to another system, such as Slack or PagerDuty.

Only one alert handler may be registered per application, and it must be registered before launching DBOS. If no handler is registered, alerts are logged automatically.
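Because only one handler can be registered per application, sending an alert to several destinations means fanning out inside that single handler. The sketch below shows one way to do this. `make_fanout_handler`, `AlertSink`, and `log_sink` are hypothetical names, not part of the DBOS API, and the sketch assumes each sink is a plain synchronous callable taking the same three arguments as the handler.

```python
import logging
from typing import Callable

# A sink takes the same three arguments as the alert handler.
AlertSink = Callable[[str, str, dict[str, str]], None]

logger = logging.getLogger("alerts")


def make_fanout_handler(*sinks: AlertSink) -> AlertSink:
    """Combine several sinks into one handler (hypothetical helper)."""

    def handle_alert(rule_type: str, message: str, metadata: dict[str, str]) -> None:
        for sink in sinks:
            try:
                sink(rule_type, message, metadata)
            except Exception:
                # One failing destination should not block the others.
                logger.exception("Alert sink %r failed", sink)

    return handle_alert


def log_sink(rule_type: str, message: str, metadata: dict[str, str]) -> None:
    """Example sink that only logs the alert."""
    logger.warning("Alert received: %s - %s", rule_type, message)


# The combined function would be registered once, before launching DBOS,
# for example from inside the single function decorated with @DBOS.alert_handler.
fanout = make_fanout_handler(log_sink)
```

Catching exceptions per sink is a design choice: a Slack outage, for example, then does not prevent the same alert from reaching a pager.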

The handler receives three arguments:

- `rule_type`: The type of alert rule. One of `WorkflowFailure`, `SlowQueue`, or `UnresponsiveApplication`.

- `message`: The alert message.

- `metadata`: Additional key-value string metadata about the alert. The metadata keys depend on the rule type:

  - `WorkflowFailure`:
    - `workflow_name`: The workflow name filter, or `*` for all workflows.
    - `failed_workflow_count`: The number of failed workflows detected in the time window.
    - `threshold`: The configured failure count threshold.
    - `period_secs`: The time window in seconds.

  - `SlowQueue`:
    - `queue_name`: The queue name filter, or `*` for all queues.
    - `stuck_workflow_count`: The number of workflows that have been in the queue for longer than the time threshold.
    - `threshold_secs`: The enqueue time threshold in seconds.

  - `UnresponsiveApplication`:
    - `application_name`: The application name.
    - `connected_executor_count`: The number of executors currently connected to this application.
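Since the metadata keys differ by rule type, a handler that wants a compact one-line summary has to branch on `rule_type`. The following is a minimal sketch of such a helper, using only the keys listed above. `summarize_alert` and its output wording are hypothetical, and missing keys fall back to `?` as a defensive assumption rather than documented behavior.

```python
def summarize_alert(rule_type: str, metadata: dict[str, str]) -> str:
    """Build a one-line summary from the metadata keys for each rule type."""

    def field(key: str) -> str:
        # All metadata values are strings; use "?" if a key is absent.
        return metadata.get(key, "?")

    if rule_type == "WorkflowFailure":
        return (
            f"{field('failed_workflow_count')} failed workflows matching "
            f"'{field('workflow_name')}' in the last {field('period_secs')}s "
            f"(threshold: {field('threshold')})"
        )
    if rule_type == "SlowQueue":
        return (
            f"{field('stuck_workflow_count')} workflows waiting in queue "
            f"'{field('queue_name')}' for over {field('threshold_secs')}s"
        )
    if rule_type == "UnresponsiveApplication":
        return (
            f"application '{field('application_name')}' is unresponsive "
            f"({field('connected_executor_count')} connected executors)"
        )
    # Unknown rule types are reported rather than raising inside the handler.
    return f"unrecognized rule type '{rule_type}'"
```

A handler could log or forward `summarize_alert(rule_type, metadata)` alongside the original `message`.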

Python

Example logging alerts:

    from dbos import DBOS

    @DBOS.alert_handler
    def handle_alert(rule_type: str, message: str, metadata: dict[str, str]) -> None:
        DBOS.logger.warning(f"Alert received: {rule_type} - {message}")
        for key, value in metadata.items():
            DBOS.logger.warning(f" {key}: {value}")

Example forwarding alerts to Slack using incoming webhooks:

    import os

    import requests
    from dbos import DBOS

    @DBOS.alert_handler
    def handle_alert(rule_type: str, message: str, metadata: dict[str, str]) -> None:
        # The webhook URL is read from the environment on each alert.
        webhook_url = os.environ.get("SLACK_WEBHOOK_URL")
        slack_text = f"*Alert: {rule_type}*\n{message}\n" + "\n".join(f"• {k}: {v}" for k, v in metadata.items())
        try:
            resp = requests.post(webhook_url, json={"text": slack_text}, timeout=10)
            resp.raise_for_status()
        except requests.RequestException as e:
            DBOS.logger.error(f"Failed to send Slack alert: {e}")

Example forwarding alerts to PagerDuty using the Events API:

    import os

    import requests
    from dbos import DBOS

    @DBOS.alert_handler
    def handle_alert(rule_type: str, message: str, metadata: dict[str, str]) -> None:
        routing_key = os.environ.get("PAGERDUTY_ROUTING_KEY")
        payload = {
            "routing_key": routing_key,
            "event_action": "trigger",
            "payload": {
                "summary": f"{rule_type}: {message}",
                "severity": "error",
                "source": "my-app",
                "custom_details": metadata,
            },
        }
        try:
            resp = requests.post(
                "https://events.pagerduty.com/v2/enqueue",
                json=payload,
                timeout=10,
            )
            resp.raise_for_status()
        except requests.RequestException as e:
            DBOS.logger.error(f"Failed to send PagerDuty alert: {e}")
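The PagerDuty example sends every alert with severity `error`. One possible refinement is to choose the severity per rule type and to set a `dedup_key`, which the Events API uses to group repeated events into the same PagerDuty alert. The sketch below builds such a payload as a pure function. The severity mapping, the `build_pagerduty_event` helper, and the dedup key format are illustrative assumptions, not something DBOS or Conductor prescribes.

```python
# Illustrative mapping; the allowed Events API v2 severities are
# "critical", "error", "warning", and "info".
SEVERITY_BY_RULE = {
    "WorkflowFailure": "error",
    "SlowQueue": "warning",
    "UnresponsiveApplication": "critical",
}


def build_pagerduty_event(
    routing_key: str,
    rule_type: str,
    message: str,
    metadata: dict[str, str],
    source: str = "my-app",
) -> dict:
    """Build an Events API v2 trigger payload (hypothetical helper)."""
    # Identify what the rule applies to, using whichever name key is present.
    target = (
        metadata.get("workflow_name")
        or metadata.get("queue_name")
        or metadata.get("application_name")
        or "unknown"
    )
    return {
        "routing_key": routing_key,
        "event_action": "trigger",
        # Assumed key format: repeated alerts for the same rule and target
        # share a key, so PagerDuty groups them together.
        "dedup_key": f"{rule_type}:{target}",
        "payload": {
            "summary": f"{rule_type}: {message}",
            "severity": SEVERITY_BY_RULE.get(rule_type, "error"),
            "source": source,
            "custom_details": metadata,
        },
    }
```

The returned dictionary can be passed as the `json` argument of the `requests.post` call in the handler.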

See the Python reference for more details.

TypeScript

Example logging alerts:

    import { DBOS } from "@dbos-inc/dbos-sdk";

    DBOS.setAlertHandler(async (ruleType: string, message: string, metadata: Record<string, string>) => {
      DBOS.logger.warn(`Alert received: ${ruleType} - ${message}`);
      for (const [key, value] of Object.entries(metadata)) {
        DBOS.logger.warn(` ${key}: ${value}`);
      }
    });

Example forwarding alerts to Slack using incoming webhooks:

    import { DBOS } from "@dbos-inc/dbos-sdk";

    DBOS.setAlertHandler(async (ruleType: string, message: string, metadata: Record<string, string>) => {
      const webhookUrl = process.env.SLACK_WEBHOOK_URL!;
      const slackText = `*Alert: ${ruleType}*\n${message}\n` +
        Object.entries(metadata).map(([k, v]) => `• ${k}: ${v}`).join("\n");
      const resp = await fetch(webhookUrl, {
        method: "POST",
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify({ text: slackText }),
      });
      if (!resp.ok) {
        DBOS.logger.error(`Failed to send Slack alert: ${resp.status}`);
      }
    });

Example forwarding alerts to PagerDuty using the Events API:

    import { DBOS } from "@dbos-inc/dbos-sdk";

    DBOS.setAlertHandler(async (ruleType: string, message: string, metadata: Record<string, string>) => {
      const routingKey = process.env.PAGERDUTY_ROUTING_KEY!;
      const payload = {
        routing_key: routingKey,
        event_action: "trigger",
        payload: {
          summary: `${ruleType}: ${message}`,
          severity: "error",
          source: "my-app",
          custom_details: metadata,
        },
      };
      const resp = await fetch("https://events.pagerduty.com/v2/enqueue", {
        method: "POST",
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify(payload),
      });
      if (!resp.ok) {
        DBOS.logger.error(`Failed to send PagerDuty alert: ${resp.status}`);
      }
    });

See the TypeScript reference for more details.